Optimization for Beginners

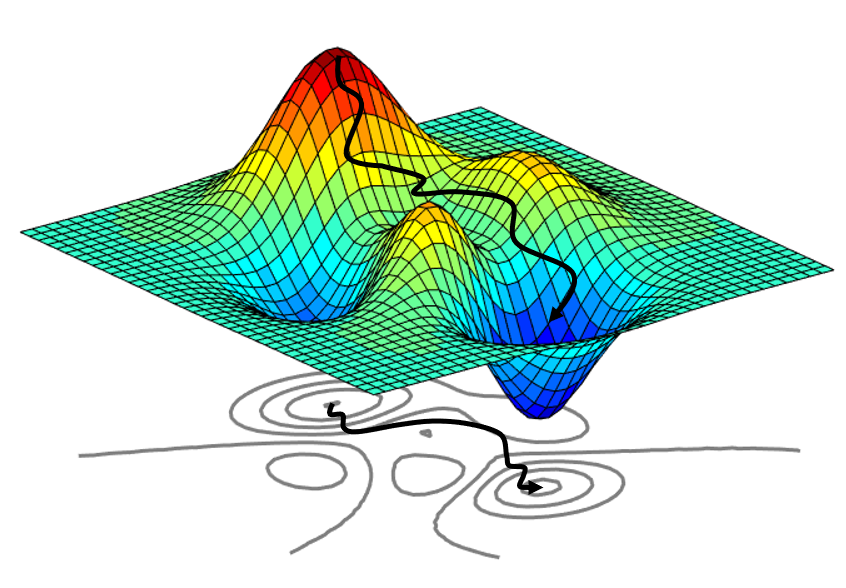

Imagine you’re on a treasure hunt, navigating a vast landscape filled with hills and valleys. Your goal? To find the lowest valley—the treasure. But how do you efficiently climb the terrain, avoiding unnecessary detours and dead-ends? Enter Lipschitz optimization, the compass for your mathematical treasure hunt. In the world of optimization, Lipschitz continuity acts as a guide, ensuring that the journey to the treasure is smooth and predictable. In this beginner-friendly exploration, we’ll unravel what Lipschitz optimization means, define it a little more formally and understand its significance in machine learning.

Lipschitz Continuity

In this landscape, Lipschitz continuity ensures that the slopes don’t change too abruptly. In other words, it puts a cap on how fast the function can change as you move from one point to another. This smoothness property is crucial in optimization problems, where we aim to find the minimum or maximum of a function (without getting lost in the way).

For a function to be Lipschitz continuous, there exists a Lipschitz constant—a positive number denoted as “L.” This constant bounds the ratio of the change in the function’s values to the distance between points. Simply put, no matter where you are on the landscape, the function won’t surprise you too much as you take steps.

The Lipschitz constant, L, provides an upper bound for this rate of change: [ |f(x) - f(y)| \leq L \cdot |x - y| ] . There are a huge number of reasons this could be useful, and I’ll provide illustrative examples showing how it can be used in machine learning.

-

Optimization:

One of the problems you have in optimization that showing convergence on an arbitrary function is really hard. A reason for that is, that we use local information (function value, gradient, hessian) to make an educated guess about how the function will behave at a nearby point. We say that using sentences like “if we take a small enough step along the negative gradient direction, the function value will decrease”. Great, but how long is “small enough” and how can we quantify that?

During each iteration of an optimization algorithm, the step size is typically chosen to balance the need for convergence speed and stability. In the context of Lipschitz continuity, one might choose the step size in a way that takes into account the Lipschitz constant L.

A common approach is to use a step size that is inversely proportional to L. Intuitively, this ensures that the step size is smaller when the function changes more rapidly, preventing overshooting.

For example, in gradient descent, the step size (αα) might be chosen as: α=1/L.

-

Robustness to noisy data

In the presence of noisy or outliers in the training data, Lipschitz continuity provides a certain degree of robustness. The Lipschitz constant limits the impact of individual data points on the overall optimization process, making the algorithm more resilient to noise and preventing overfitting.

-

Parallelization: Lipschitz continuity also facilitates the parallelization of optimization algorithms. The bounded gradient allows for efficient distribution of computations, enabling parallel updates of model parameters across different computing resources.

In summary, finding a Lipschitz constant helps optimize loss functions in machine learning by providing stability, controlling step sizes, enabling efficient hyperparameter tuning, enhancing robustness to noise, promoting generalization, and facilitating parallelization of optimization algorithms.